By Rebekah Bostan, Parul Wadhwa, and Jo Stansfield

Have you ever wondered what you can do, as an individual, to foster digital inclusion in your organisation?

Colleagues are not powerless in their organisations’ adoption of AI and are likely to play a vital role in ensuring that adoption and usage are inclusive. To help you, Making Change in Insurance (MCII) and Jo Stansfield have put together a list of actions individuals can take to support inclusive digital transformation and AI adoption.

AI will transform all types of business, and it is already transforming insurance by increasing personalisation, reducing operating costs and supporting new product development. However, we need to ensure that adoption is as inclusive as possible at every stage: the design, development, deployment and use of the technology and, importantly, its overall governance. In particular, given the current speed of digital transformation and AI development, we need to ensure that we do not end up excluding some groups and deskilling others.

“Technological progress has to be designed to support humanity’s progress and be aligned to human values. Among such values, equity and inclusion are the most central to ensure that AI is beneficial for all.”

You may or may not have direct influence in all the areas highlighted, but either way, we think you should know what best practice looks like and where you can influence the development of more inclusive digital adoption and implementation.

1) 🚨 Get your learning hat on – you are not a helpless bystander in the accelerated adoption of AI.

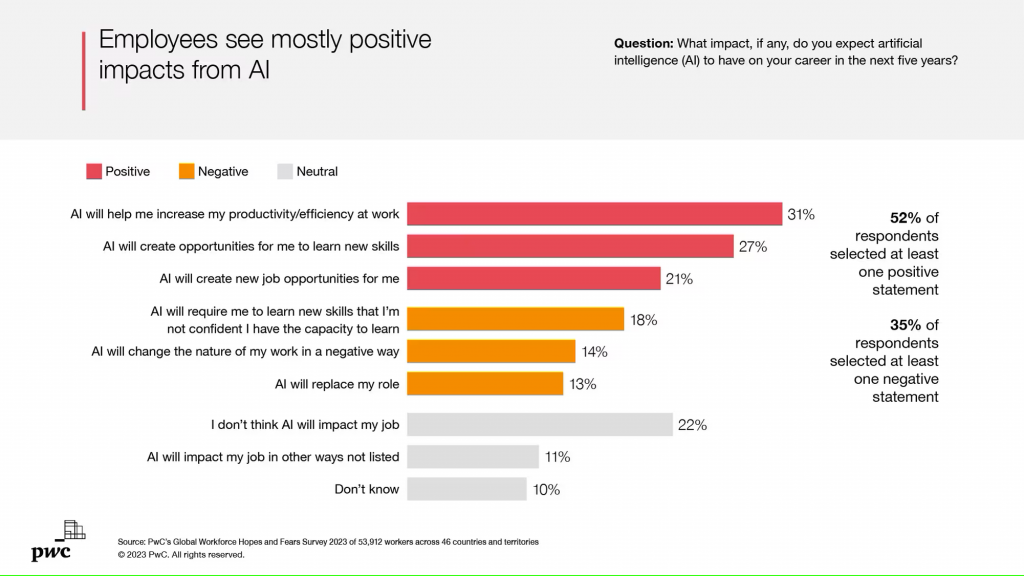

AI, and especially generative AI, can act as a co-pilot, offering direction and even tools to help us undertake our work more efficiently, but humans remain responsible for how it is used. The June 2023 PwC Workforce Hopes and Fears Survey found that 57% of respondents felt that AI would positively support them in acquiring new skills or improving their productivity. Unfortunately, most organisations are not yet providing sufficient staff training, so it is important for individuals to start engaging with technologies such as generative AI themselves.

Some great personal upskilling tools we have found include:

- Google’s Generative AI Learning Path (10 Courses)

- Microsoft’s Transform Your Business With AI Course

- LinkedIn and Microsoft’s Career Essentials In Generative AI Course

- Digital Partner’s Fundamentals of ChatGPT Course

- Phil Ebner’s AI Crash Course on Udemy

- Coursera’s Prompt Engineering for ChatGPT Course

| ACTION: ⚡Commit to upskilling yourself – make a weekly plan |

2) 🎯 Recognise automation bias and value the need for a ‘human in the loop’

Checks and balances are vital to stop people prioritising the results of automated decision-making systems over their own judgement, especially as the use of automation is only likely to increase.

Be aware of the human tendency towards “automation bias”: we tend to trust automated systems even when information is available showing that they may not be trustworthy. Consequently, we may follow the system’s suggestions or actions uncritically and fail to take other sources of information into account. Alternatively, we may fail to notice warning signs from the system because we are not monitoring it with sufficient diligence.

| ACTION: ⚡Be aware of the risk of falling for automation bias. In your interactions with AI systems, be diligent in monitoring the system, know its limitations, and seek other sources of information to verify its outputs. ⚡Find out what checks and balances your organisation is putting in place to minimise automation bias and, where you find them lacking, raise the issue. |

3) 🌈 Accept that inclusive digital transformation requires multiple layers of defence (starting with technical operations and oversight, then moving to audit)

Organisations have three main layers of defence to ensure inclusive digital transformation: technical operations, oversight and accountability, and audit. As an individual, you are likely to be directly or indirectly involved in one of these layers. Taking time to understand what your organisation is doing to support each layer will help you to see where there might be gaps or ineffective defences.

| ACTION: ⚡ Be willing to ask challenging questions about your organisation’s layers of defence, whatever your level in the organisation. Here are some suggestions. Technical operations: How are we assessing, on an ongoing basis, that the system is operating as intended, and how are we managing the risk of unintended consequences? Oversight and accountability: What is our data ethics framework for using data appropriately and responsibly? If you are part of an ethics committee, ask: Do we have sufficiently diverse inputs represented across technical and data ethics skillsets, and across our stakeholder community? Some great resources to learn more about data ethics: McKinsey’s article on data ethics; ForHumanity’s article on the rise of ethics committees. Audit: Do we have sufficient transparency and maturity of approach to enable an effective audit? |

4) 📲 Minimise ‘garbage in, garbage out’

AI risks reinforcing our own prejudices because AI systems can only be as good as the data they learn from, which often contains inherent human biases. However good the machine learning model, if the input data is of poor quality, the model will learn incorrectly and produce poor-quality results. “Garbage”, or poor-quality data, can include inaccurate data that poorly represents its target, incomplete data, inconsistent data, and data that is not valid for the purpose it is being used for.

| ACTION: ⚡Support reducing, or even eliminating, data biases by advocating strongly for the testing and evaluation of training data and for systems that provide continuous monitoring. ⚡Report adverse incidents rather than assuming others will, and if your organisation doesn’t have a reporting system in place, advocate for one. |

5) 🛡️ Understand why protecting your own and other people’s data is important

We live in a world where websites and other services continuously request access to our data, and most of the time we provide it without thinking. Our data has become an alternative form of payment: the price of access to a service.

But we don’t necessarily need to click “yes” to get that access, and there are good reasons why we should keep our personal information private. Keeping your information private helps to protect against identity theft, keeps your financial information safe and protects you from possible discrimination.

This also matters when we are entrusted to work with other people’s data, including in AI systems that process data about people. In the UK, under the UK GDPR, people have a set of rights, including the right to be informed about the collection and use of their data, the right to object, and the right not to be subject to a decision based solely on automated processing.

| ACTION: ⚡Read your organisation’s Privacy Policy and understand how you can report misuse ⚡Learn about your rights and responsibilities when it comes to personal data – you can find a great resource here. |

6) 💡 Support successful adoption and usage of AI by fostering cross-organisational, intergenerational and intersectional dialogue

Intergenerational and intersectional dialogue about adoption and usage is important for effective and inclusive adoption. Activating cross-organisational exchanges of information and skills can reduce uncertainty and bias, supporting more successful adoption of AI. This can include reverse mentoring, where younger employees teach senior staff about new technologies and use cases. Intersectional workshops are another important way to bring together employees from different age groups and communities to solve business challenges, including inclusive AI adoption.

| ACTION: ⚡Look for opportunities to enrich your understanding of the impact of AI usage and adoption by calling for intergenerational and cross-organisational dialogues within your organisation. |

7) 🛠️ Increased tech-focused hiring is likely to exacerbate existing diversity gaps and even create an ‘age gap’ – counter this by advocating for colleague upskilling

Whilst organisations are likely to substantially increase their tech-focused hiring over the next few years, the scale of need means that existing staff will also need to be upskilled or reskilled. McKinsey notes that hiring an employee can cost up to 100% of their annual salary, whereas upskilling or reskilling costs under 10%. A focus on upskilling existing employees is also likely to help close diversity gaps, as pure tech hires still tend to be less diverse than the general population. Reskilling can also prevent an ‘age gap’ from appearing, where older workers are effectively deskilled and exit organisations prematurely.

| ACTION: ⚡Advocate for more AI upskilling and reskilling opportunities across all departments and levels so no one is left behind. |

MAKING CHANGE IN INSURANCE

We are an inclusive and supportive group of insurance and technology-focused change makers who have regular challenging conversations about diversity, equity and inclusion. We then share our insights with the wider insurance and tech community. We would love you to join us at our next event – please contact Rebekah Bostan, Caroline Langridge, Areefih Ghaith or Parul Wadhwa for further information.

The above action plan was influenced by an MCII discussion in October 2023 where we explored actions individuals can take to foster inclusive AI adoption and digital transformation within organisations.